Is There AI Beyond ChatGPT?

By Borys Stokalski, Bogumił Kamiński, Daniel Kaszyński

Abstract

This article discusses the need for policymakers, AI investors, and AI practitioners to see beyond the current generative AI hype and retain a broad field of vision of AI, to avoid developing blind spots in areas that may become even more impactful than generative AI. The article explores the key drivers shaping the trajectory of AI growth, including monetization potential, regulation, and industrialization, as well as the challenge of principles/outcomes alignment. It also discusses the CAST AI design framework, developed by the GPAI Future of Work working group, which supports the conceptualization, evaluation, and design of AI-based products and solutions. The CAST model links capabilities such as generative insights or execution autonomy to design heuristics and patterns, as well as to responsible AI principles. Together, these can help solution architects and product owners shape their design decisions and backlogs in a way that leads to responsible, robust, and well-architected solutions.

Pervasive, Free, and Capable

The public launch of ChatGPT has become an inflection point in broad AI awareness. “Generative AI” pops up in every discussion about AI. And rightly so, as transformer-based chat and content generators are spectacular and persuasive demonstrations of what advanced algorithms exploiting enormous data sets can achieve. Arguably, what makes these examples so spectacular is that they represent capabilities considered paramount to human cognition: creative skills and the “understanding” of complex, interrelated concepts.

The appeal is amplified by three facts: the publicly available services are very easy to use, are usually offered as freemium subscriptions, and deliver results that are more than good enough for anyone who can live with occasional “hallucinations” and has the patience to fine-tune prompts.

The upheaval in the public debate about the impact of AI on society and the economy can easily become skewed toward generative AI. Generative AI is the lens frequently applied when discussing issues such as AI and IP rights, AI's impact on jobs, AI explainability, or trustworthiness. However, generative AI in the form presented by Bard, DALL-E, or ChatGPT is not all there is to AI. Policymakers, AI investors, and AI practitioners must retain a broad field of vision of AI to avoid developing blind spots in areas that may become even more impactful than generative AI.

We need to pose some fundamental questions:

- What are the key drivers shaping the trajectory of AI growth, and how can they be addressed?

- In what directions and how far can AI-related innovations take us?

- What kind of solutions can emerge as AI techniques and tools mature?

- How are the public concerns and values related to the current and future evolution of AI solutions?

- How can these concerns be addressed by design, engineering, and governance disciplines?

Drivers and Challenges of AI Growth

The undisputed number one driver of AI growth is its monetization potential. International Data Corporation (IDC) projects that global spending on AI and cognitive technologies will reach $204 billion in 2025, as 90% of commercial products are expected to embrace AI components. According to Market & Markets research data, the projected annual revenue attributed to AI in 2025 will reach $119 billion. PricewaterhouseCoopers (PwC) projects the AI market valuation to be close to $16 trillion. On the other hand, according to Will Oremus of the Washington Post, “AI chatbots lose money every time you use them” [1]. Oremus argues that the best-quality models are too expensive to run under business models financed by cheap freemium subscriptions. The trade-off between model scope, performance, and cost might be one of the key factors influencing the monetization of AI-based capabilities and, therefore, AI growth. Consequently, when charting a map of future scenarios for AI evolution, we need to go beyond AI tools and techniques and focus on the motivations that drive the application of powerful and complex architectures and lead to sustainable business models.

Another factor that will influence AI growth is regulation. The recent EU AI Act will, for example, outright ban AI-based solutions that represent unacceptable human rights risks, such as social scoring systems. It will also impose strict requirements for data governance, detailed documentation, human oversight, transparency, robustness, and accuracy on high-risk solutions, such as applications related to critical infrastructure operations, health services, or autonomous transport.

The third factor that will affect AI growth is the level of industrialization: the capability to scale the design, delivery, and support processes for AI-based solutions responsibly and cost-effectively. Current research shows that this area is in acute need of improvement. According to the “AI as a System Technology” report [2], AI teams often prioritize short-term solutions, such as “code that works”, over technical safeguards commonly seen in other fields of “responsible engineering”. Stress testing is rare, and there is little to no evidence of adequate risk margins being applied to machine learning (ML) systems, of redundancy in critical components providing fallback options, or of fail-safe functions.

Industrialization of AI is a necessity because the supply of AI talent lags behind demand for the roles driving the current, mostly experimentation-driven AI supply chain. According to McKinsey's “2023 Technology Trends Outlook”, the availability of general ML skills covers barely a third of job postings, while for specific AI technology platforms, such as Keras, this coverage is less than 5% [3]. To meet monetization expectations, AI product vendors must upskill their general IT talent: architects, designers, developers, and DevOps engineers. Model development and testing automation concepts such as machine learning operations (MLOps) are essential tools for upskilling generic backend and frontend engineers and making them productive in AI. However, to fully address the complex, interdependent challenges described above, AI proponents, policymakers, and practitioners need to implement comprehensive, pragmatic tools and methods in the form of responsible AI process frameworks. Such frameworks should support the conceptualization of value propositions, architecture design, and risk management for AI-based products and projects, as well as best practices for feature design, development, and delivery aligned with responsible and trustworthy design principles.

AI Frameworks Abundance Paradox

Obviously, no single responsible AI framework uniformly covers this entire spectrum of business, governance, and technology issues. Most organizations need to adapt and integrate a number of frameworks that reflect the complexity of their AI products and services. Alas, such integration is not simple, as there is little constructive alignment between existing frameworks addressing responsible and trustworthy AI. Research published in June 2023 by the Center for Security and Emerging Technology (CSET), a policy research organization affiliated with Georgetown University, analyzed over 40 responsible AI frameworks and found that “these frameworks are currently scattered and difficult to compare” [4]. The authors advocate a user-centric approach to framework selection and adaptation, focusing on delivery (development and production) and governance teams. They illustrate their concept with three use cases: (1) a CEO establishing sustainable practices for building ethical AI, (2) a data scientist documenting an ML model, and (3) a legal team defining a data protection policy. While constructive and pragmatic, this approach does little to overcome the key challenge of principles/outcomes alignment: the lack of joint ownership of design concepts and product values among solution proponents (investors, product owners), governance teams, and development teams. Such shared ownership is at the heart of a truly responsible approach.

It should be stressed that in other areas of IT, governance, business value, architecture, and delivery are much better aligned and supported by specialized frameworks and best practices. Service governance, service design, service-oriented architecture, and service management are one example; data governance, the data-driven organization, and business intelligence architecture patterns and processes are another. In both cases there is a common vocabulary, along with well-defined and well-aligned processes that enable efficient and effective collaboration among governance, business, development, and delivery teams. Paradoxically, while we have an abundance of responsible AI frameworks and guidelines, they are not very effective in supporting the emergence of responsible AI engineering and responsible AI growth.

The GPAI CAST project aims to provide at least a partial remedy for this situation.

Navigating AI Evolution with CAST

The CAST AI design framework [5], developed by the GPAI Future of Work working group, supports three fundamental use cases: (1) conceptualization and design of AI-based products and solutions, (2) identification of product and project principles and best practices, and (3) evaluation of AI-based products and solutions from the perspective of responsible AI principles. Like the CSET framework matrix, CAST takes advantage of existing best practices where they are well established, while adding a number of AI-specific design heuristics and patterns. CAST's major differentiator stems from its underlying model of “smart solutions”, which helps navigate and integrate product features, design practices, and principles of trustworthy and responsible AI in the context of a product's modus operandi and motivation. CAST also aims to provide its framework as an open, interactive online resource developed by a community of AI practitioners.

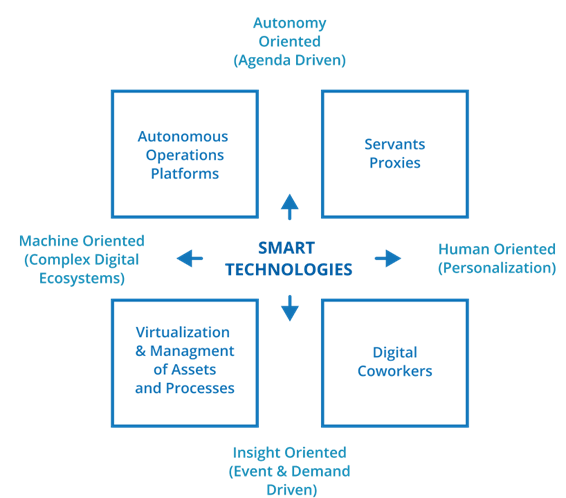

The CAST model is based on business capabilities rather than AI techniques. Business capabilities are essential building blocks for B2C as well as B2B value propositions, which helps to keep focus on human needs when charting innovations using specific AI tools and techniques (jointly labelled as “smart technologies” on the CAST model). The following picture represents high level view of CAST solution categories:

The capabilities follow the key directions and dimensions along which the application of AI evolves. One clear direction is enhancing human cognitive productivity with insights or relevant creative or semi-creative content. This solution category, “Digital Coworkers”, arguably has the highest visibility, the most inflated expectations, and the greatest public concern due to the recent introduction of pervasive generative AI tools. But it is by no means the only direction in which AI can take us.

A direction with at least equal potential to transform work and society is that of autonomous solutions. Autonomy can be applied to tasks or processes humans perform (such as driving a vehicle, moving materials around a warehouse or factory line, or assisting patients with mobility impairments). There is a large category of services performed autonomously by machines (and software), including new, autonomous modes of operation for financial management, facility management, or retail services. But this category of solutions, named “Servant Proxies” to stress their focus on serving human recipients (users), is not where autonomy ends. In the growing internet of things, with tens of billions of devices ranging from simple sensors to complex edge devices, we need machines to take care of machines. Such autonomous operations are the future of large-scale manufacturing, transport, logistics, infrastructure, agriculture, and food processing.

Just as “digital coworkers” enable human productivity with insights and valuable content, digital ecosystems also require insight-oriented services to enable efficient component interoperability, coordination and planning, or failure prevention. This is the category of solutions based on the concept of “digital twins”: virtual models of assets and processes that provide insights used to achieve robustness, fail-safety, and efficiency in complex digital ecosystems.

Combining these four solution categories creates a map that can be used for AI solution conceptualization, roadmapping or evaluation. Moreover, the CAST model links capabilities such as generative insights or execution autonomy to design heuristics and patterns, as well as responsible AI principles that can provide support to solution architects and product owners in shaping their design decisions and backlogs in a way that leads to responsible, robust and well-architected solutions.
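To make the idea of a “map” more concrete, the sketch below encodes one possible reading of the text as a 2x2 grid: whether a solution mainly delivers insight or acts autonomously, and whether it mainly serves people or other machines. This is a minimal illustration under stated assumptions, not the CAST specification. The axis names (`Mode`, `Recipient`), the Python representation, and the label “Autonomous Operations” for the machines-serving-machines category (which the text describes but does not name) are hypothetical.

```python
from enum import Enum


class Mode(Enum):
    # Assumed axis: does the solution supply insight/content, or act on its own?
    INSIGHT = "insight"
    AUTONOMY = "autonomy"


class Recipient(Enum):
    # Assumed axis: does the solution primarily serve people or other machines?
    HUMANS = "humans"
    MACHINES = "machines"


# Hypothetical 2x2 reading of the four solution categories described in the text.
# "Autonomous Operations" is an illustrative label, not an official CAST name.
CATEGORY_MAP = {
    (Mode.INSIGHT, Recipient.HUMANS): "Digital Coworkers",
    (Mode.AUTONOMY, Recipient.HUMANS): "Servant Proxies",
    (Mode.INSIGHT, Recipient.MACHINES): "Digital Twins",
    (Mode.AUTONOMY, Recipient.MACHINES): "Autonomous Operations",
}


def classify(mode: Mode, recipient: Recipient) -> str:
    """Return the solution category for a (mode, recipient) pair."""
    return CATEGORY_MAP[(mode, recipient)]


if __name__ == "__main__":
    # Examples paraphrased from the article, placed on the assumed grid.
    examples = {
        "generating content for a knowledge worker": (Mode.INSIGHT, Recipient.HUMANS),
        "driving a vehicle for a passenger": (Mode.AUTONOMY, Recipient.HUMANS),
        "virtual model of a plant for failure prevention": (Mode.INSIGHT, Recipient.MACHINES),
        "machines maintaining IoT edge devices": (Mode.AUTONOMY, Recipient.MACHINES),
    }
    for description, (mode, recipient) in examples.items():
        print(f"{description}: {classify(mode, recipient)}")
```

Keeping the grid as a plain dictionary keyed by the two axes makes it easy to attach further information per cell later, for example the design heuristics or responsible AI principles that a team considers relevant to each category.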

Conclusion

Industries such as transport or pharma have adopted standards-based regulatory systems that balance innovation and engineering with the public interest. The digital industry lacks a shared definition of what constitutes “AI proper”, as well as broadly accepted AI governance, design, and engineering practices. Existing frameworks are scattered and poorly aligned.

As AI components will be present in the large majority of B2B and B2C products and services, including critical infrastructure, the digital industry needs tools that improve alignment and integrate existing best practices into a broadly accessible body of knowledge. Such a body of knowledge is needed to help remedy the shortage of AI talent and to make ethical design of AI products a shared responsibility of governance, management, and technology teams.

References

1. Oremus, W., AI chatbots lose money every time you use them, Washington Post, June 5th, 2023, https://www.washingtonpost.com/technology/2023/06/05/chatgpt-hidden-cost-gpu-compute/

2. Sheikh, H., Prins, C., Schrijvers, E. (2023). Mission AI. Research for Policy. Springer, Cham. https://rdcu.be/du4Hs

3. Technology Trends Outlook – 2023, McKinsey publication, July 2023, https://www.mckinsey.com/~/media/mckinsey/business%20functions/mckinsey%20digital/our%20insights/mckinsey%20technology%20trends%20outlook%202023/mckinsey-technology-trends-outlook-2023-v5.pdf

4. Narayanan, M., Schoeberl, Ch., A Matrix for Selecting Responsible AI Frameworks, CSET Report, June 2023, https://cset.georgetown.edu/publication/a-matrix-for-selecting-responsible-ai-frameworks/

5. Stokalski, B., Kamiński, B., Kaszyński, D., Kroplewski, R., CAST. Constructive Approach to Smart Technologies, GPAI Publication, https://gpai.ai/projects/future-of-work/FoW3_CAST.pdf

uploaded 5 February 2024
